Decision and Control of Complex Systems

Developing scientific machine learning methods for modeling and control of complex systems.

Abstract

The objective of this project is to develop the mathematical foundations and a framework for controlling and optimizing complex systems through novel scientific machine learning (SciML) methods. In US Department of Energy (DOE) mission areas, the analysis, simulation, and optimization of these complex systems have been the objectives of numerous past research efforts. A fundamental challenge in modeling and controlling complex systems is that high-fidelity models can be prohibitively expensive to construct. This limitation is not necessarily due to a lack of fundamental understanding of the underlying natural phenomena but to practical constraints, such as the difficulty of instrumenting complex systems to collect relevant data, the need to resolve inherent uncertainties in the models (e.g., due to unmodeled physics), and the loss of observability due to excessive exogenous noise. The general applicability of current solutions is hampered by the ad hoc nature of the approaches and the lack of a coherent theoretical framework. The new SciML methods developed under this project address key challenges of complex systems: model construction, uncertainty quantification, decision and control, and continual learning.

The research in this project is conducted by researchers from Oak Ridge National Laboratory; Pacific Northwest National Laboratory; the University of California, Santa Barbara; and Arizona State University.

Safe Data-driven Control

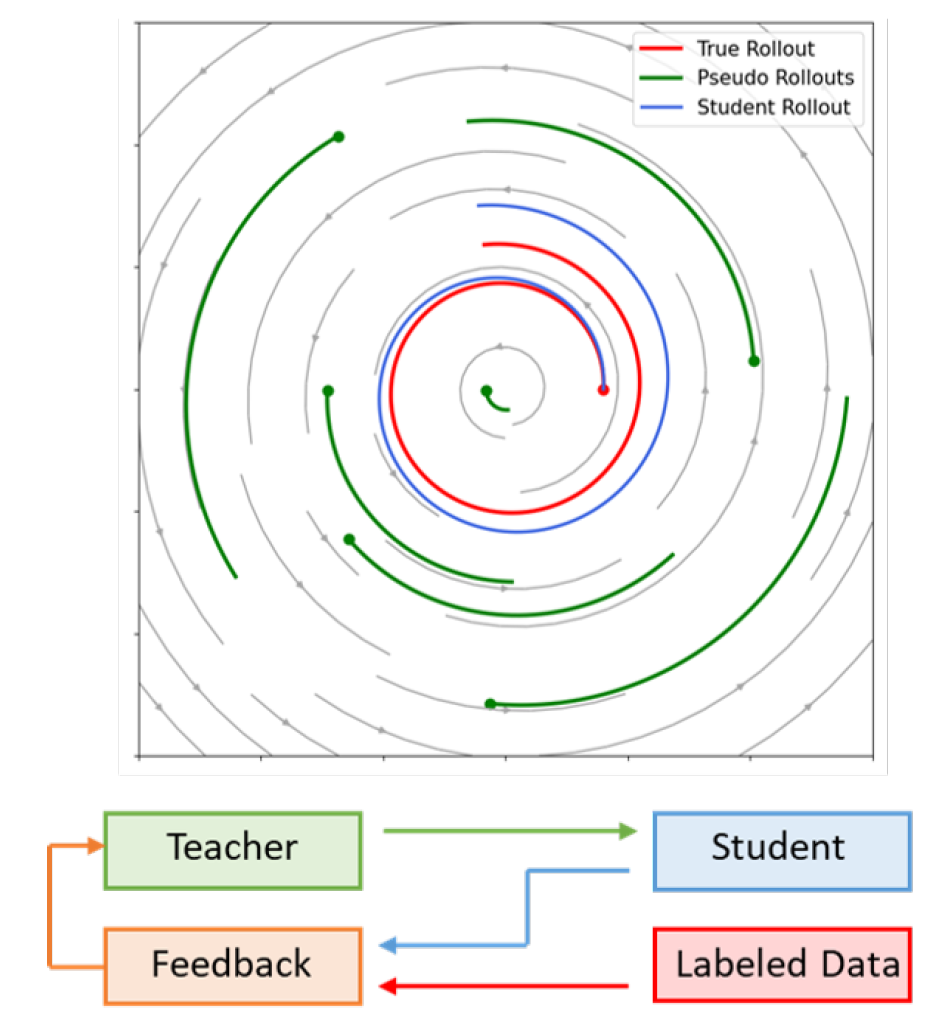

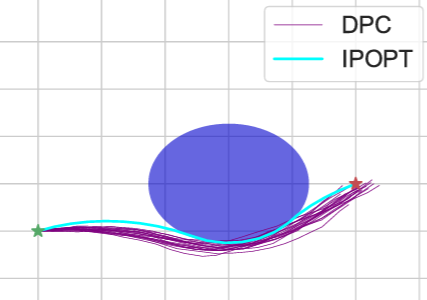

Under this project, our team has developed a range of provably safe data-driven control methods based on the differentiable programming paradigm. The developed methods can be used in conjunction with any learning-based controller, such as a deep reinforcement learning control policy. We have focused on extensions of a promising offline model-based policy optimization approach called differentiable predictive control (DPC).

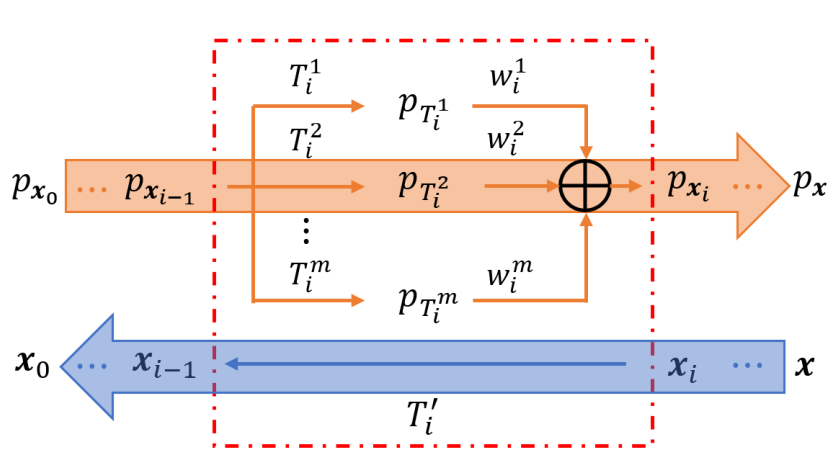

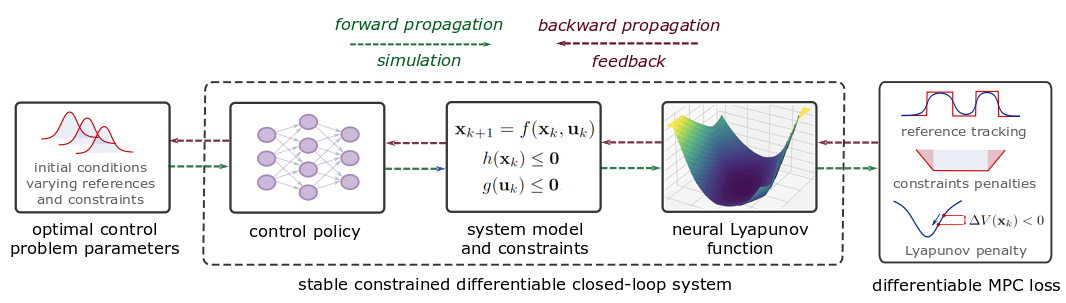

DPC is a modern SciML method that brings the benefits of both worlds. It combines the model-based interpretability, constraint handling, and performance guarantees of model predictive control (MPC) with the policy optimization of deep reinforcement learning (RL). This synergy allows DPC to learn control policy parameters directly by backpropagating the MPC objective function and constraints through a differentiable model of the dynamical system. Differentiable models can include a family of physics-based and data-driven differential equation models, such as neural ODEs, universal differential equations (UDEs), or neural state space models (SSMs).
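To make the idea of differentiating an MPC objective through a model concrete, here is a minimal sketch in plain Python. It is not the project's implementation or the Neuromancer API. It assumes a hypothetical scalar linear model `x[t+1] = a*x[t] + b*u[t]`, a one-parameter policy `u = k*x`, a quadratic stage cost, and a soft penalty on the state bound `|x| <= x_max`. In place of automatic differentiation, the gradient is propagated by hand with forward sensitivities, and a clipped gradient step stands in for a real optimizer; all names and numbers are illustrative assumptions.

```python
# Illustrative DPC-style policy optimization on a toy scalar system.
# Assumptions (not from the source): model x[t+1] = a*x[t] + b*u[t],
# policy u = k*x with a single trainable gain k, hand-written sensitivities.

def rollout_loss_and_grad(k, x0, a=1.2, b=1.0, horizon=5,
                          r=0.1, x_max=1.0, penalty=10.0):
    """Roll out the closed loop and return (loss, d loss / d k)."""
    x, dx = x0, 0.0              # state and its sensitivity dx/dk
    loss, dloss = 0.0, 0.0
    for _ in range(horizon):
        u = k * x                # policy
        du = x + k * dx          # du/dk by the product rule
        x_next = a * x + b * u   # differentiable model step
        dx_next = a * dx + b * du
        # MPC-style stage cost: state regulation plus control effort
        loss += x_next ** 2 + r * u ** 2
        dloss += 2.0 * x_next * dx_next + 2.0 * r * u * du
        # Soft state constraint |x| <= x_max as a squared-violation penalty
        viol = max(0.0, abs(x_next) - x_max)
        if viol > 0.0:
            sign = 1.0 if x_next > 0.0 else -1.0
            loss += penalty * viol ** 2
            dloss += 2.0 * penalty * viol * sign * dx_next
        x, dx = x_next, dx_next
    return loss, dloss


def train_policy(k=0.0, lr=0.05, epochs=200,
                 initial_states=(-1.0, -0.5, 0.5, 1.0)):
    """Offline training: average the gradient over sampled initial states."""
    for _ in range(epochs):
        grads = [rollout_loss_and_grad(k, x0)[1] for x0 in initial_states]
        grad = sum(grads) / len(grads)
        grad = max(-1.0, min(1.0, grad))   # gradient clipping for robustness
        k -= lr * grad
    return k


if __name__ == "__main__":
    k = train_policy()
    print("learned gain:", k, "closed-loop pole:", 1.2 + 1.0 * k)
```

In this toy setting the open-loop model is unstable (`a > 1`), so the untrained policy `k = 0` violates the state bound within a few steps. Training is done entirely offline on sampled initial conditions, which mirrors the offline character of DPC described above. A neural policy and model would replace the scalar gain and the hand-derived sensitivities with automatic differentiation.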

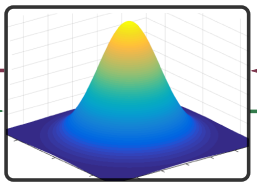

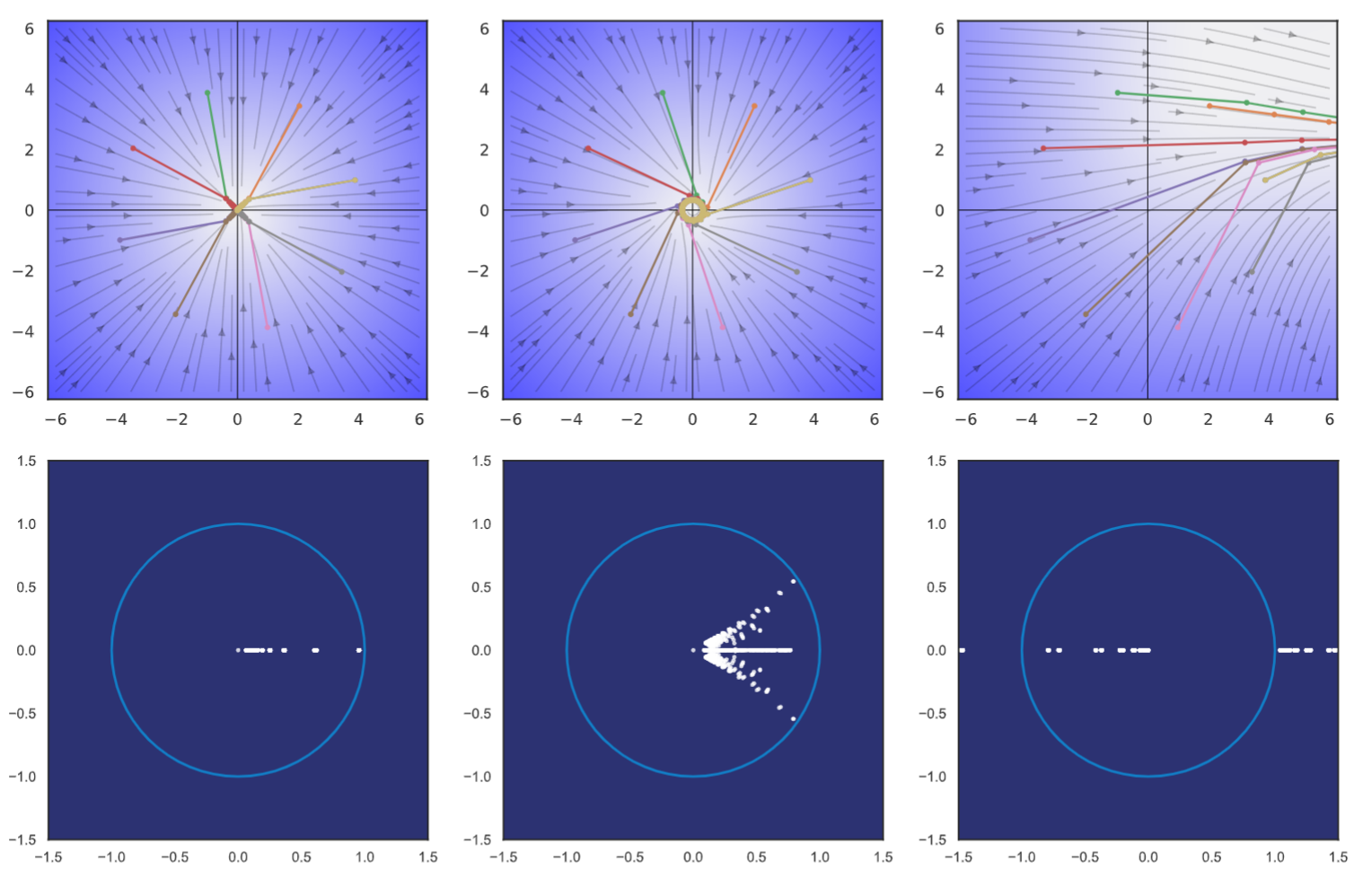

Neural Lyapunov Differentiable Predictive Control combines the principles of Lyapunov functions, model predictive control, reinforcement learning, and differentiable programming to offer a systematic approach to offline model-based policy optimization with guaranteed stability.

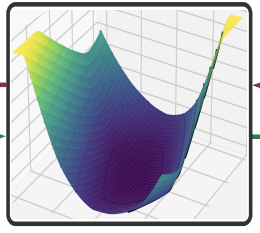

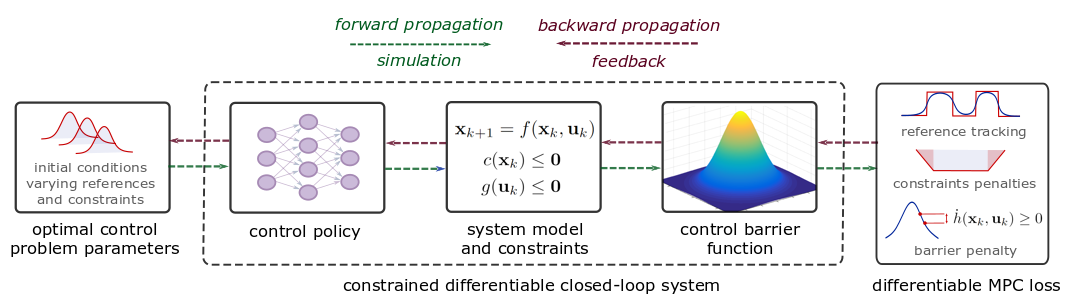

Differentiable Predictive Control with Safety Guarantees: A Control Barrier Function Approach combines the principles of control barrier functions, model predictive control, reinforcement learning, and differentiable programming to offer a systematic approach to offline model-based policy optimization with guaranteed constraint satisfaction via projections onto the feasible set.
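The projection idea can be illustrated with a small sketch. It is a simplified stand-in and not the method from the paper. It assumes a hypothetical scalar model `x_next = a*x + b*u` with `b > 0`, a barrier function `h(x) = x_max - x`, and the discrete-time condition `h(x_next) >= (1 - gamma) * h(x)`. With a single input, the safe set of inputs is a half-line, so the Euclidean projection reduces to a `min`.

```python
# Illustrative control-barrier-function (CBF) safety filter for a scalar system.
# Assumptions (not from the source): x_next = a*x + b*u with b > 0,
# barrier h(x) = x_max - x, and decay rate 0 < gamma <= 1.

def cbf_safety_filter(x, u_nominal, a=1.2, b=1.0, x_max=1.0, gamma=0.5):
    """Project u_nominal onto {u : h(a*x + b*u) >= (1 - gamma) * h(x)}."""
    # Solving the CBF inequality for u gives an upper bound on the input.
    u_bound = (gamma * x_max + (1.0 - gamma - a) * x) / b
    # Projection onto the half-line (-inf, u_bound]
    return min(u_nominal, u_bound)


def simulate(x0, policy, steps=20, a=1.2, b=1.0, use_filter=True):
    """Roll out the closed loop, optionally filtering the policy output."""
    xs = [x0]
    x = x0
    for _ in range(steps):
        u = policy(x)
        if use_filter:
            u = cbf_safety_filter(x, u, a=a, b=b)
        x = a * x + b * u
        xs.append(x)
    return xs


if __name__ == "__main__":
    aggressive = lambda x: 0.5           # hypothetical policy pushing toward the bound
    print("filtered max state:  ", max(simulate(0.0, aggressive)))
    print("unfiltered max state:", max(simulate(0.0, aggressive, use_filter=False)))
```

The filter leaves the learned policy untouched whenever its output is already safe and modifies it minimally otherwise. For multi-input systems with several constraints, the same projection would typically be posed as a small quadratic program rather than a closed-form `min`.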

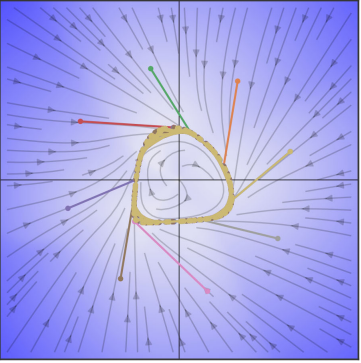

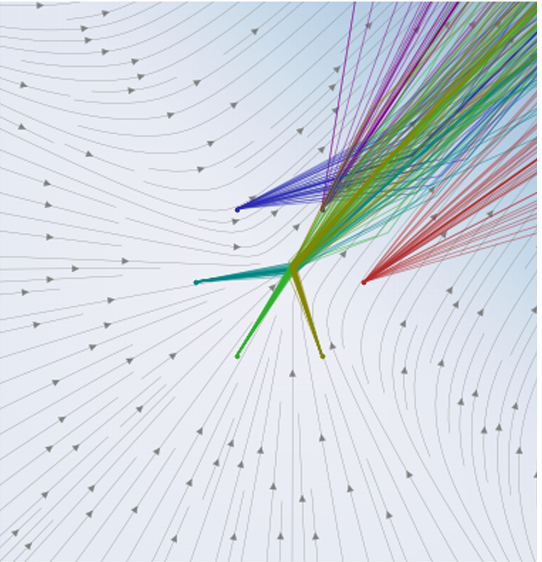

Stability analysis of deep neural models

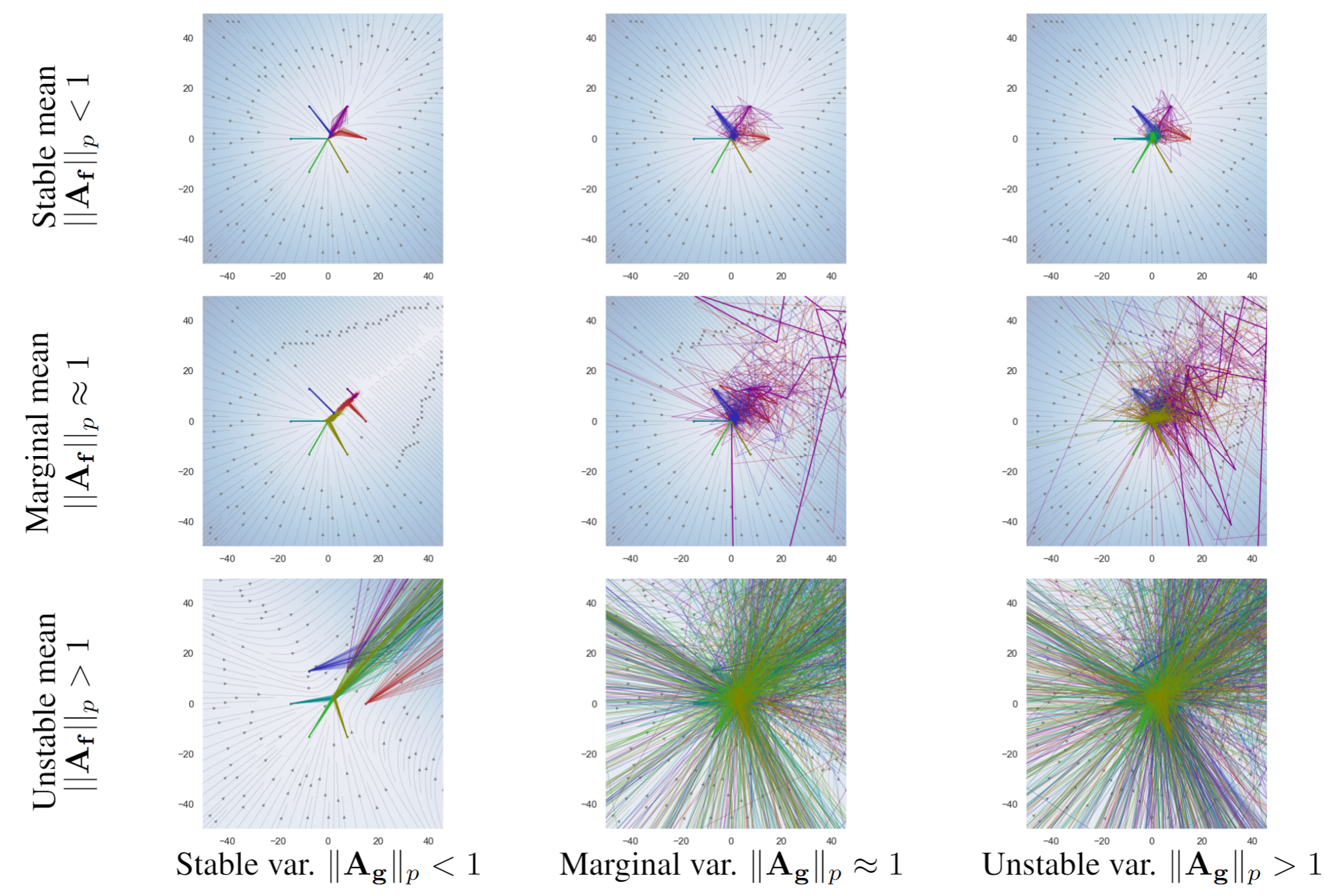

In recent years, deep neural networks (DNNs) have become ever more integrated into safety-critical and high-performance systems, including robotics, autonomous driving, and process control, where formal verification methods are desired to ensure safe operation. Stability is a property of dynamical systems that is of paramount importance for safety-verified control applications. To address this need, we provide sufficient conditions for i) dissipativity and ii) stochastic stability of discrete-time dynamical systems parametrized by DNNs. To do so, we leverage the representation of neural networks as pointwise affine maps, thus exposing their local linear operators and making them accessible to classical system analysis and design methods. This allows us to “crack open the black box” of the neural dynamical system’s behavior. We relate the spectral properties of the network weights and the choice of activation functions to the stability and overall dynamic behavior of these models. Based on the theory, we propose several practical methods for designing constrained neural dynamical models with guaranteed stability.
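The pointwise affine view can be illustrated with a small sketch. It is not the analysis from the papers. It assumes a hypothetical one-hidden-layer ReLU network with hand-picked 2x2 weights, and it uses the induced infinity norm as a simple stand-in for the spectral quantities discussed above. At any input `x`, the network equals `A(x) x + c(x)`, where `A(x) = W2 D(x) W1` and `D(x)` is the diagonal 0/1 activation pattern. Because ReLU slopes lie in [0, 1], `||W2|| * ||W1|| < 1` is a sufficient (and conservative) condition for the map to be a contraction.

```python
# Illustrative pointwise affine form of a ReLU network, plus a norm-based
# sufficient condition for contraction. Weights are hypothetical examples.

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def inf_norm(M):
    """Induced infinity norm: maximum absolute row sum."""
    return max(sum(abs(m) for m in row) for row in M)

def relu_net(x, W1, b1, W2, b2):
    z = [zi + bi for zi, bi in zip(matvec(W1, x), b1)]
    h = [max(0.0, zi) for zi in z]
    return [yi + bi for yi, bi in zip(matvec(W2, h), b2)]

def pointwise_affine(x, W1, b1, W2, b2):
    """Return (A, c) such that relu_net(x) == A @ x + c at this particular x."""
    z = [zi + bi for zi, bi in zip(matvec(W1, x), b1)]
    d = [1.0 if zi > 0.0 else 0.0 for zi in z]            # activation pattern
    W2D = [[w * dj for w, dj in zip(row, d)] for row in W2]
    A = matmul(W2D, W1)                                    # local linear operator
    c = [ci + bi for ci, bi in zip(matvec(W2D, b1), b2)]   # local offset
    return A, c

W1 = [[0.5, -0.2], [0.1, 0.4]]
b1 = [0.1, -0.1]
W2 = [[0.6, 0.3], [-0.2, 0.5]]
b2 = [0.0, 0.0]

if __name__ == "__main__":
    x = [1.0, -2.0]
    A, c = pointwise_affine(x, W1, b1, W2, b2)
    print("local operator A(x):", A)
    print("norm bound:", inf_norm(W2) * inf_norm(W1))   # < 1 implies contraction
```

The local operator `A(x)` changes with the activation pattern, but its norm is always bounded by the product of the weight norms. Constraining the weights therefore gives a practical way to build neural dynamical models that are stable by design.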

Dissipative neural dynamical systems: For more information, read the paper.

Stable deep Markov models: For more information, read the paper.

Open-source Software

The technology developed under this project is being open-sourced as part of the Neuromancer Scientific Machine Learning (SciML) library developed by our team at PNNL.

PNNL Team

- Mahantesh Halappanavar (PI)

- Ján Drgoňa (Task lead)

- Wenceslao Shaw Cortez

- Sayak Mukherjee

- Sam Chatterjee

- Vikas Chandan

Acknowledgements

This project was supported by the U.S. Department of Energy (DOE) through the Office of Advanced Scientific Computing Research’s “Data-Driven Decision Control for Complex Systems (DnC2S)” project. PNNL is a multi-program national laboratory operated for the U.S. DOE by Battelle Memorial Institute under Contract No. DE-AC05-76RL0-1830.